![f:id:yohei-a:20181021053908j:image:w360 f:id:yohei-a:20181021053908j:image:w360]()

![f:id:yohei-a:20181021053904j:image:w360 f:id:yohei-a:20181021053904j:image:w360]()

![f:id:yohei-a:20181021053900j:image:w360 f:id:yohei-a:20181021053900j:image:w360]()

Column chunks

Column chunks are composed of pages written back to back. The pages share a common header, and readers can skip over pages they are not interested in. The data for the page follows the header and can be compressed and/or encoded. The compression and encoding are specified in the page metadata.

https://parquet.apache.org/documentation/latest/
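To make the "skip over pages" idea concrete, here is a minimal sketch. It assumes a hypothetical, simplified page layout (`[int pageId][int bodyLength][body]`); real Parquet page headers are Thrift-encoded and carry much more metadata, so `PageSkipSketch`, `writePages`, and `readPage` are illustrative names only, not part of any Parquet API.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical, simplified layout: each page is [int pageId][int bodyLength][body bytes].
// Real Parquet page headers are Thrift structures; this only illustrates header-based skipping.
final class PageSkipSketch
{
    // Writes the given bodies back to back, each preceded by its small header.
    static ByteBuffer writePages(String... bodies)
    {
        int size = 0;
        for (String body : bodies) {
            size += 8 + body.getBytes(StandardCharsets.UTF_8).length;
        }
        ByteBuffer buffer = ByteBuffer.allocate(size);
        for (int pageId = 0; pageId < bodies.length; pageId++) {
            byte[] bytes = bodies[pageId].getBytes(StandardCharsets.UTF_8);
            buffer.putInt(pageId).putInt(bytes.length).put(bytes);
        }
        buffer.flip();
        return buffer;
    }

    // Returns the body of the wanted page, or null if it is absent.
    // Unwanted pages are skipped using only the length stored in their header.
    static String readPage(ByteBuffer chunk, int wantedPageId)
    {
        ByteBuffer view = chunk.duplicate();
        while (view.remaining() >= 8) {
            int pageId = view.getInt();
            int length = view.getInt();
            if (pageId == wantedPageId) {
                byte[] body = new byte[length];
                view.get(body);
                return new String(body, StandardCharsets.UTF_8);
            }
            view.position(view.position() + length); // skip the body without decoding it
        }
        return null;
    }

    public static void main(String[] args)
    {
        ByteBuffer chunk = writePages("alpha", "beta", "gamma");
        System.out.println(readPage(chunk, 2));
    }
}
```

The point of the sketch is that the reader never touches the bytes of "alpha" or "beta": the header alone tells it how far to jump.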

![f:id:yohei-a:20181021052338p:image:w640 f:id:yohei-a:20181021052338p:image:w640]()

![f:id:yohei-a:20181021052335p:image:w640 f:id:yohei-a:20181021052335p:image:w640]()

https://events.static.linuxfound.org/sites/events/files/slides/Presto.pdf

(1) read only required columns in Parquet and build columnar blocks on the fly, saving CPU and memory to transform row-based Parquet records into columnar blocks, and (2) evaluate the predicate using columnar blocks in the Presto engine.

Engineering Data Analytics with Presto and Parquet at Uber
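The two points in the quote can be illustrated with a small sketch. It uses plain Java arrays as a stand-in for columnar blocks (this is not Presto's `Block` API, and `ColumnarFilterSketch` and its methods are hypothetical names): the predicate runs over one column only, and another column is read just at the matching positions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.IntPredicate;

// Hypothetical in-memory stand-in for columnar blocks; not Presto's Block API.
final class ColumnarFilterSketch
{
    // Evaluates the predicate over a single column and returns the matching row positions.
    static int[] selectPositions(int[] filterColumn, IntPredicate predicate)
    {
        int[] positions = new int[filterColumn.length];
        int count = 0;
        for (int position = 0; position < filterColumn.length; position++) {
            if (predicate.test(filterColumn[position])) {
                positions[count++] = position;
            }
        }
        return Arrays.copyOf(positions, count);
    }

    // Reads another column only at the selected positions.
    static List<String> project(String[] column, int[] positions)
    {
        List<String> result = new ArrayList<>();
        for (int position : positions) {
            result.add(column[position]);
        }
        return result;
    }

    public static void main(String[] args)
    {
        int[] fare = {12, 55, 8, 70};
        String[] city = {"SF", "NYC", "LA", "SEA"};
        // Any other column of the table is never materialized in this sketch.
        int[] positions = selectPositions(fare, value -> value > 50);
        System.out.println(project(city, positions));
    }
}
```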

New Hive Parquet Reader

We have added a new Parquet reader implementation. The new reader supports vectorized reads, lazy loading, and predicate push down, all of which make the reader more efficient and typically reduce wall clock time for a query. Although the new reader has been heavily tested, it is an extensive rewrite of the Apache Hive Parquet reader, and may have some latent issues, so it is not enabled by default. If you are using Parquet we suggest you test out the new reader on a per-query basis by setting the `<hive-catalog>.parquet_optimized_reader_enabled` session property, or you can enable the reader by default by setting the Hive catalog property hive.parquet-optimized-reader.enabled=true. To enable Parquet predicate push down there is a separate session property `<hive-catalog>.parquet_predicate_pushdown_enabled` and configuration property hive.parquet-predicate-pushdown.enabled=true.

https://prestodb.io/docs/current/release/release-0.138.html

![f:id:yohei-a:20181021051335p:image f:id:yohei-a:20181021051335p:image]()

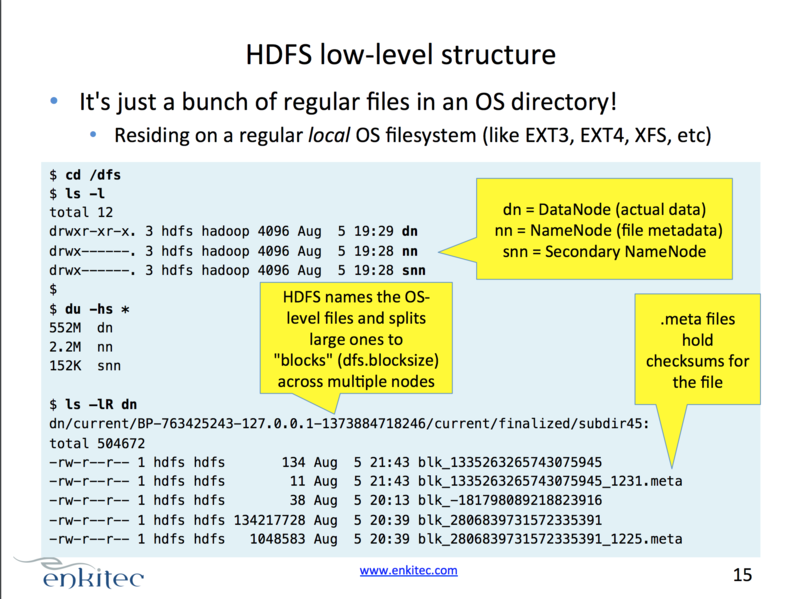

Hadoop Internals for Oracle Developers and DBAs: Strata Conference + Hadoop World 2013 - O'Reilly Conferences, October 28 - 30, 2013, New York, NY

```java
package com.facebook.presto.parquet.reader;

import com.facebook.presto.parquet.RichColumnDescriptor;
import com.facebook.presto.spi.block.BlockBuilder;
import com.facebook.presto.spi.type.Type;
import io.airlift.slice.Slice;
import parquet.io.api.Binary;

import static com.facebook.presto.spi.type.Chars.isCharType;
import static com.facebook.presto.spi.type.Chars.truncateToLengthAndTrimSpaces;
import static com.facebook.presto.spi.type.Varchars.isVarcharType;
import static com.facebook.presto.spi.type.Varchars.truncateToLength;
import static io.airlift.slice.Slices.EMPTY_SLICE;
import static io.airlift.slice.Slices.wrappedBuffer;

public class BinaryColumnReader
        extends PrimitiveColumnReader
{
    public BinaryColumnReader(RichColumnDescriptor descriptor)
    {
        super(descriptor);
    }

    @Override
    protected void readValue(BlockBuilder blockBuilder, Type type)
    {
        if (definitionLevel == columnDescriptor.getMaxDefinitionLevel()) {
            Binary binary = valuesReader.readBytes();
            Slice value;
            if (binary.length() == 0) {
                value = EMPTY_SLICE;
            }
            else {
                value = wrappedBuffer(binary.getBytes());
            }
            if (isVarcharType(type)) {
                value = truncateToLength(value, type);
            }
            if (isCharType(type)) {
                value = truncateToLengthAndTrimSpaces(value, type);
            }
            type.writeSlice(blockBuilder, value);
        }
        else if (isValueNull()) {
            blockBuilder.appendNull();
        }
    }

    @Override
    protected void skipValue()
    {
        if (definitionLevel == columnDescriptor.getMaxDefinitionLevel()) {
            valuesReader.readBytes();
        }
    }
}
```
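The key check in `readValue` and `skipValue` above is `definitionLevel == columnDescriptor.getMaxDefinitionLevel()`: only then is a value actually stored in the page. The following is a minimal sketch of that rule for the simplest case, a flat optional column (an assumption; nested columns need the extra `isValueNull()` distinction). `DefinitionLevelSketch` and `assemble` are hypothetical names, and plain lists stand in for the page's value stream.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Sketch for a flat optional column only; plain lists stand in for Parquet's value stream.
final class DefinitionLevelSketch
{
    // Rebuilds rows from definition levels: only a level equal to maxDefinitionLevel
    // consumes a stored value, every other level produces a null row.
    static List<String> assemble(int[] definitionLevels, int maxDefinitionLevel, List<String> storedValues)
    {
        Iterator<String> values = storedValues.iterator();
        List<String> rows = new ArrayList<>();
        for (int level : definitionLevels) {
            if (level == maxDefinitionLevel) {
                rows.add(values.next());
            }
            else {
                rows.add(null);
            }
        }
        return rows;
    }

    public static void main(String[] args)
    {
        // Five rows, but only three values are physically stored.
        System.out.println(assemble(new int[] {1, 0, 1, 1, 0}, 1, Arrays.asList("a", "b", "c")));
    }
}
```

This also shows why `skipValue` must still call `valuesReader.readBytes()` for defined values: skipping a row has to advance the value stream, otherwise later rows would be misaligned.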

```java
package com.facebook.presto.parquet.reader;

import com.facebook.presto.parquet.DataPage;
import com.facebook.presto.parquet.DataPageV1;
import com.facebook.presto.parquet.DataPageV2;
import com.facebook.presto.parquet.DictionaryPage;
import com.facebook.presto.parquet.Field;
import com.facebook.presto.parquet.ParquetEncoding;
import com.facebook.presto.parquet.ParquetTypeUtils;
import com.facebook.presto.parquet.RichColumnDescriptor;
import com.facebook.presto.parquet.dictionary.Dictionary;
import com.facebook.presto.spi.PrestoException;
import com.facebook.presto.spi.block.BlockBuilder;
import com.facebook.presto.spi.type.DecimalType;
import com.facebook.presto.spi.type.Type;
import io.airlift.slice.Slice;
import it.unimi.dsi.fastutil.ints.IntArrayList;
import it.unimi.dsi.fastutil.ints.IntList;
import parquet.bytes.BytesUtils;
import parquet.column.ColumnDescriptor;
import parquet.column.values.ValuesReader;
import parquet.column.values.rle.RunLengthBitPackingHybridDecoder;
import parquet.io.ParquetDecodingException;

import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.util.Optional;
import java.util.function.Consumer;

import static com.facebook.presto.parquet.ParquetTypeUtils.createDecimalType;
import static com.facebook.presto.parquet.ValuesType.DEFINITION_LEVEL;
import static com.facebook.presto.parquet.ValuesType.REPETITION_LEVEL;
import static com.facebook.presto.parquet.ValuesType.VALUES;
import static com.facebook.presto.spi.StandardErrorCode.NOT_SUPPORTED;
import static com.google.common.base.Preconditions.checkArgument;
import static com.google.common.base.Verify.verify;
import static java.util.Objects.requireNonNull;

public abstract class PrimitiveColumnReader
{
    private static final int EMPTY_LEVEL_VALUE = -1;
    protected final RichColumnDescriptor columnDescriptor;

    protected int definitionLevel = EMPTY_LEVEL_VALUE;
    protected int repetitionLevel = EMPTY_LEVEL_VALUE;
    protected ValuesReader valuesReader;

    private int nextBatchSize;
    private LevelReader repetitionReader;
    private LevelReader definitionReader;
    private long totalValueCount;
    private PageReader pageReader;
    private Dictionary dictionary;
    private int currentValueCount;
    private DataPage page;
    private int remainingValueCountInPage;
    private int readOffset;

    protected abstract void readValue(BlockBuilder blockBuilder, Type type);

    protected abstract void skipValue();

    protected boolean isValueNull()
    {
        return ParquetTypeUtils.isValueNull(columnDescriptor.isRequired(), definitionLevel, columnDescriptor.getMaxDefinitionLevel());
    }

    public static PrimitiveColumnReader createReader(RichColumnDescriptor descriptor)
    {
        switch (descriptor.getType()) {
            case BOOLEAN:
                return new BooleanColumnReader(descriptor);
            case INT32:
                return createDecimalColumnReader(descriptor).orElse(new IntColumnReader(descriptor));
            case INT64:
                return createDecimalColumnReader(descriptor).orElse(new LongColumnReader(descriptor));
            case INT96:
                return new TimestampColumnReader(descriptor);
            case FLOAT:
                return new FloatColumnReader(descriptor);
            case DOUBLE:
                return new DoubleColumnReader(descriptor);
            case BINARY:
                return createDecimalColumnReader(descriptor).orElse(new BinaryColumnReader(descriptor));
            case FIXED_LEN_BYTE_ARRAY:
                return createDecimalColumnReader(descriptor)
                        .orElseThrow(() -> new PrestoException(NOT_SUPPORTED, " type FIXED_LEN_BYTE_ARRAY supported as DECIMAL; got " + descriptor.getPrimitiveType().getOriginalType()));
            default:
                throw new PrestoException(NOT_SUPPORTED, "Unsupported parquet type: " + descriptor.getType());
        }
    }

    private static Optional<PrimitiveColumnReader> createDecimalColumnReader(RichColumnDescriptor descriptor)
    {
        Optional<Type> type = createDecimalType(descriptor);
        if (type.isPresent()) {
            DecimalType decimalType = (DecimalType) type.get();
            return Optional.of(DecimalColumnReaderFactory.createReader(descriptor, decimalType.getPrecision(), decimalType.getScale()));
        }
        return Optional.empty();
    }

    public PrimitiveColumnReader(RichColumnDescriptor columnDescriptor)
    {
        this.columnDescriptor = requireNonNull(columnDescriptor, "columnDescriptor");
        pageReader = null;
    }

    public PageReader getPageReader()
    {
        return pageReader;
    }

    public void setPageReader(PageReader pageReader)
    {
        this.pageReader = requireNonNull(pageReader, "pageReader");
        DictionaryPage dictionaryPage = pageReader.readDictionaryPage();

        if (dictionaryPage != null) {
            try {
                dictionary = dictionaryPage.getEncoding().initDictionary(columnDescriptor, dictionaryPage);
            }
            catch (IOException e) {
                throw new ParquetDecodingException("could not decode the dictionary for " + columnDescriptor, e);
            }
        }
        else {
            dictionary = null;
        }
        checkArgument(pageReader.getTotalValueCount() > 0, "page is empty");
        totalValueCount = pageReader.getTotalValueCount();
    }

    public void prepareNextRead(int batchSize)
    {
        readOffset = readOffset + nextBatchSize;
        nextBatchSize = batchSize;
    }

    public ColumnDescriptor getDescriptor()
    {
        return columnDescriptor;
    }

    public ColumnChunk readPrimitive(Field field)
            throws IOException
    {
        IntList definitionLevels = new IntArrayList();
        IntList repetitionLevels = new IntArrayList();
        seek();
        BlockBuilder blockBuilder = field.getType().createBlockBuilder(null, nextBatchSize);
        int valueCount = 0;
        while (valueCount < nextBatchSize) {
            if (page == null) {
                readNextPage();
            }
            int valuesToRead = Math.min(remainingValueCountInPage, nextBatchSize - valueCount);
            readValues(blockBuilder, valuesToRead, field.getType(), definitionLevels, repetitionLevels);
            valueCount += valuesToRead;
        }
        checkArgument(valueCount == nextBatchSize, "valueCount %s not equals to batchSize %s", valueCount, nextBatchSize);

        readOffset = 0;
        nextBatchSize = 0;
        return new ColumnChunk(blockBuilder.build(), definitionLevels.toIntArray(), repetitionLevels.toIntArray());
    }

    private void readValues(BlockBuilder blockBuilder, int valuesToRead, Type type, IntList definitionLevels, IntList repetitionLevels)
    {
        processValues(valuesToRead, ignored -> {
            readValue(blockBuilder, type);
            definitionLevels.add(definitionLevel);
            repetitionLevels.add(repetitionLevel);
        });
    }

    private void skipValues(int valuesToRead)
    {
        processValues(valuesToRead, ignored -> skipValue());
    }

    private void processValues(int valuesToRead, Consumer<Void> valueConsumer)
    {
        if (definitionLevel == EMPTY_LEVEL_VALUE && repetitionLevel == EMPTY_LEVEL_VALUE) {
            definitionLevel = definitionReader.readLevel();
            repetitionLevel = repetitionReader.readLevel();
        }
        int valueCount = 0;
        for (int i = 0; i < valuesToRead; i++) {
            do {
                valueConsumer.accept(null);
                valueCount++;
                if (valueCount == remainingValueCountInPage) {
                    updateValueCounts(valueCount);
                    if (!readNextPage()) {
                        return;
                    }
                    valueCount = 0;
                }
                repetitionLevel = repetitionReader.readLevel();
                definitionLevel = definitionReader.readLevel();
            }
            while (repetitionLevel != 0);
        }
        updateValueCounts(valueCount);
    }

    private void seek()
    {
        checkArgument(currentValueCount <= totalValueCount, "Already read all values in column chunk");
        if (readOffset == 0) {
            return;
        }
        int valuePosition = 0;
        while (valuePosition < readOffset) {
            if (page == null) {
                readNextPage();
            }
            int offset = Math.min(remainingValueCountInPage, readOffset - valuePosition);
            skipValues(offset);
            valuePosition = valuePosition + offset;
        }
        checkArgument(valuePosition == readOffset, "valuePosition %s must be equal to readOffset %s", valuePosition, readOffset);
    }

    private boolean readNextPage()
    {
        verify(page == null, "readNextPage has to be called when page is null");
        page = pageReader.readPage();
        if (page == null) {
            return false;
        }
        remainingValueCountInPage = page.getValueCount();
        if (page instanceof DataPageV1) {
            valuesReader = readPageV1((DataPageV1) page);
        }
        else {
            valuesReader = readPageV2((DataPageV2) page);
        }
        return true;
    }

    private void updateValueCounts(int valuesRead)
    {
        if (valuesRead == remainingValueCountInPage) {
            page = null;
            valuesReader = null;
        }
        remainingValueCountInPage -= valuesRead;
        currentValueCount += valuesRead;
    }

    private ValuesReader readPageV1(DataPageV1 page)
    {
        ValuesReader rlReader = page.getRepetitionLevelEncoding().getValuesReader(columnDescriptor, REPETITION_LEVEL);
        ValuesReader dlReader = page.getDefinitionLevelEncoding().getValuesReader(columnDescriptor, DEFINITION_LEVEL);
        repetitionReader = new LevelValuesReader(rlReader);
        definitionReader = new LevelValuesReader(dlReader);
        try {
            byte[] bytes = page.getSlice().getBytes();
            rlReader.initFromPage(page.getValueCount(), bytes, 0);
            int offset = rlReader.getNextOffset();
            dlReader.initFromPage(page.getValueCount(), bytes, offset);
            offset = dlReader.getNextOffset();
            return initDataReader(page.getValueEncoding(), bytes, offset, page.getValueCount());
        }
        catch (IOException e) {
            throw new ParquetDecodingException("Error reading parquet page " + page + " in column " + columnDescriptor, e);
        }
    }

    private ValuesReader readPageV2(DataPageV2 page)
    {
        repetitionReader = buildLevelRLEReader(columnDescriptor.getMaxRepetitionLevel(), page.getRepetitionLevels());
        definitionReader = buildLevelRLEReader(columnDescriptor.getMaxDefinitionLevel(), page.getDefinitionLevels());
        return initDataReader(page.getDataEncoding(), page.getSlice().getBytes(), 0, page.getValueCount());
    }

    private LevelReader buildLevelRLEReader(int maxLevel, Slice slice)
    {
        if (maxLevel == 0) {
            return new LevelNullReader();
        }
        return new LevelRLEReader(new RunLengthBitPackingHybridDecoder(BytesUtils.getWidthFromMaxInt(maxLevel), new ByteArrayInputStream(slice.getBytes())));
    }

    private ValuesReader initDataReader(ParquetEncoding dataEncoding, byte[] bytes, int offset, int valueCount)
    {
        ValuesReader valuesReader;
        if (dataEncoding.usesDictionary()) {
            if (dictionary == null) {
                throw new ParquetDecodingException("Dictionary is missing for Page");
            }
            valuesReader = dataEncoding.getDictionaryBasedValuesReader(columnDescriptor, VALUES, dictionary);
        }
        else {
            valuesReader = dataEncoding.getValuesReader(columnDescriptor, VALUES);
        }

        try {
            valuesReader.initFromPage(valueCount, bytes, offset);
            return valuesReader;
        }
        catch (IOException e) {
            throw new ParquetDecodingException("Error reading parquet page in column " + columnDescriptor, e);
        }
    }
}
```
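The loop in `readPrimitive` fills one batch from as many pages as needed, taking `Math.min(remainingValueCountInPage, nextBatchSize - valueCount)` values at a time. Below is a stripped-down sketch of just that control flow. It assumes pages are already decoded into `int[]` arrays and ignores repetition levels, dictionaries, and seeking; `BatchAcrossPagesSketch` and `readBatch` are hypothetical names, not Presto classes.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model: each inner array stands in for one already-decoded data page.
final class BatchAcrossPagesSketch
{
    private final int[][] pages;
    private int pageIndex;
    private int positionInPage;

    BatchAcrossPagesSketch(int[][] pages)
    {
        this.pages = pages;
    }

    // Reads up to batchSize values, crossing page boundaries when a page runs out.
    List<Integer> readBatch(int batchSize)
    {
        List<Integer> batch = new ArrayList<>();
        while (batch.size() < batchSize && pageIndex < pages.length) {
            int remainingInPage = pages[pageIndex].length - positionInPage;
            // Never read past the current page or past the requested batch size.
            int valuesToRead = Math.min(remainingInPage, batchSize - batch.size());
            for (int i = 0; i < valuesToRead; i++) {
                batch.add(pages[pageIndex][positionInPage++]);
            }
            if (positionInPage == pages[pageIndex].length) {
                pageIndex++; // page exhausted, the next iteration starts a new page
                positionInPage = 0;
            }
        }
        return batch;
    }

    public static void main(String[] args)
    {
        BatchAcrossPagesSketch reader = new BatchAcrossPagesSketch(new int[][] {{1, 2, 3}, {4, 5}, {6, 7, 8, 9}});
        System.out.println(reader.readBatch(4));
        System.out.println(reader.readBatch(4));
        System.out.println(reader.readBatch(4));
    }
}
```

Unlike this sketch, the real reader counts rows rather than raw values when repetition levels are present, which is what the `do ... while (repetitionLevel != 0)` loop in `processValues` handles.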

```java
package com.facebook.presto.hive.parquet;

import com.facebook.presto.hive.HiveColumnHandle;
import com.facebook.presto.parquet.Field;
import com.facebook.presto.parquet.ParquetCorruptionException;
import com.facebook.presto.parquet.reader.ParquetReader;
import com.facebook.presto.spi.ConnectorPageSource;
import com.facebook.presto.spi.Page;
import com.facebook.presto.spi.PrestoException;
import com.facebook.presto.spi.block.Block;
import com.facebook.presto.spi.block.LazyBlock;
import com.facebook.presto.spi.block.LazyBlockLoader;
import com.facebook.presto.spi.block.RunLengthEncodedBlock;
import com.facebook.presto.spi.predicate.TupleDomain;
import com.facebook.presto.spi.type.Type;
import com.facebook.presto.spi.type.TypeManager;
import com.google.common.collect.ImmutableList;
import parquet.io.MessageColumnIO;
import parquet.schema.MessageType;

import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.List;
import java.util.Optional;
import java.util.Properties;

import static com.facebook.presto.hive.HiveColumnHandle.ColumnType.REGULAR;
import static com.facebook.presto.hive.HiveErrorCode.HIVE_BAD_DATA;
import static com.facebook.presto.hive.HiveErrorCode.HIVE_CURSOR_ERROR;
import static com.facebook.presto.hive.parquet.ParquetPageSourceFactory.getParquetType;
import static com.facebook.presto.parquet.ParquetTypeUtils.getFieldIndex;
import static com.facebook.presto.parquet.ParquetTypeUtils.lookupColumnByName;
import static com.google.common.base.Preconditions.checkState;
import static java.util.Objects.requireNonNull;
import static parquet.io.ColumnIOConverter.constructField;

public class ParquetPageSource
        implements ConnectorPageSource
{
    private static final int MAX_VECTOR_LENGTH = 1024;

    private final ParquetReader parquetReader;
    private final MessageType fileSchema;
    private final List<String> columnNames;
    private final List<Type> types;
    private final List<Optional<Field>> fields;

    private final Block[] constantBlocks;
    private final int[] hiveColumnIndexes;

    private int batchId;
    private boolean closed;
    private long readTimeNanos;
    private final boolean useParquetColumnNames;

    public ParquetPageSource(
            ParquetReader parquetReader,
            MessageType fileSchema,
            MessageColumnIO messageColumnIO,
            TypeManager typeManager,
            Properties splitSchema,
            List<HiveColumnHandle> columns,
            TupleDomain<HiveColumnHandle> effectivePredicate,
            boolean useParquetColumnNames)
    {
        requireNonNull(splitSchema, "splitSchema is null");
        requireNonNull(columns, "columns is null");
        requireNonNull(effectivePredicate, "effectivePredicate is null");
        this.parquetReader = requireNonNull(parquetReader, "parquetReader is null");
        this.fileSchema = requireNonNull(fileSchema, "fileSchema is null");
        this.useParquetColumnNames = useParquetColumnNames;

        int size = columns.size();
        this.constantBlocks = new Block[size];
        this.hiveColumnIndexes = new int[size];

        ImmutableList.Builder<String> namesBuilder = ImmutableList.builder();
        ImmutableList.Builder<Type> typesBuilder = ImmutableList.builder();
        ImmutableList.Builder<Optional<Field>> fieldsBuilder = ImmutableList.builder();
        for (int columnIndex = 0; columnIndex < size; columnIndex++) {
            HiveColumnHandle column = columns.get(columnIndex);
            checkState(column.getColumnType() == REGULAR, "column type must be regular");

            String name = column.getName();
            Type type = typeManager.getType(column.getTypeSignature());

            namesBuilder.add(name);
            typesBuilder.add(type);
            hiveColumnIndexes[columnIndex] = column.getHiveColumnIndex();

            if (getParquetType(column, fileSchema, useParquetColumnNames) == null) {
                constantBlocks[columnIndex] = RunLengthEncodedBlock.create(type, null, MAX_VECTOR_LENGTH);
                fieldsBuilder.add(Optional.empty());
            }
            else {
                String columnName = useParquetColumnNames ? name : fileSchema.getFields().get(column.getHiveColumnIndex()).getName();
                fieldsBuilder.add(constructField(type, lookupColumnByName(messageColumnIO, columnName)));
            }
        }
        types = typesBuilder.build();
        fields = fieldsBuilder.build();
        columnNames = namesBuilder.build();
    }

    @Override
    public long getCompletedBytes()
    {
        return parquetReader.getDataSource().getReadBytes();
    }

    @Override
    public long getReadTimeNanos()
    {
        return readTimeNanos;
    }

    @Override
    public boolean isFinished()
    {
        return closed;
    }

    @Override
    public long getSystemMemoryUsage()
    {
        return parquetReader.getSystemMemoryContext().getBytes();
    }

    @Override
    public Page getNextPage()
    {
        try {
            batchId++;
            long start = System.nanoTime();

            int batchSize = parquetReader.nextBatch();

            readTimeNanos += System.nanoTime() - start;

            if (closed || batchSize <= 0) {
                close();
                return null;
            }

            Block[] blocks = new Block[hiveColumnIndexes.length];
            for (int fieldId = 0; fieldId < blocks.length; fieldId++) {
                if (constantBlocks[fieldId] != null) {
                    blocks[fieldId] = constantBlocks[fieldId].getRegion(0, batchSize);
                }
                else {
                    Type type = types.get(fieldId);
                    Optional<Field> field = fields.get(fieldId);
                    int fieldIndex;
                    if (useParquetColumnNames) {
                        fieldIndex = getFieldIndex(fileSchema, columnNames.get(fieldId));
                    }
                    else {
                        fieldIndex = hiveColumnIndexes[fieldId];
                    }
                    if (fieldIndex != -1 && field.isPresent()) {
                        blocks[fieldId] = new LazyBlock(batchSize, new ParquetBlockLoader(field.get()));
                    }
                    else {
                        blocks[fieldId] = RunLengthEncodedBlock.create(type, null, batchSize);
                    }
                }
            }
            return new Page(batchSize, blocks);
        }
        catch (PrestoException e) {
            closeWithSuppression(e);
            throw e;
        }
        catch (RuntimeException e) {
            closeWithSuppression(e);
            throw new PrestoException(HIVE_CURSOR_ERROR, e);
        }
    }

    private void closeWithSuppression(Throwable throwable)
    {
        requireNonNull(throwable, "throwable is null");
        try {
            close();
        }
        catch (RuntimeException e) {
            if (e != throwable) {
                throwable.addSuppressed(e);
            }
        }
    }

    @Override
    public void close()
    {
        if (closed) {
            return;
        }
        closed = true;

        try {
            parquetReader.close();
        }
        catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    private final class ParquetBlockLoader
            implements LazyBlockLoader<LazyBlock>
    {
        private final int expectedBatchId = batchId;
        private final Field field;
        private boolean loaded;

        public ParquetBlockLoader(Field field)
        {
            this.field = requireNonNull(field, "field is null");
        }

        @Override
        public final void load(LazyBlock lazyBlock)
        {
            if (loaded) {
                return;
            }

            checkState(batchId == expectedBatchId);

            try {
                Block block = parquetReader.readBlock(field);
                lazyBlock.setBlock(block);
            }
            catch (ParquetCorruptionException e) {
                throw new PrestoException(HIVE_BAD_DATA, e);
            }
            catch (IOException e) {
                throw new PrestoException(HIVE_CURSOR_ERROR, e);
            }
            loaded = true;
        }
    }
}
```
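`getNextPage` wraps each column in a `LazyBlock` whose `ParquetBlockLoader` reads the column only when something first touches it, which is the "lazy loading" mentioned in the release note. The idea can be sketched without any Presto types. `LazyColumnSketch` below is a hypothetical class, and a `Supplier<int[]>` stands in for the expensive `parquetReader.readBlock(field)` call.

```java
import java.util.function.Supplier;

// Hypothetical stand-in for a lazily loaded column; not Presto's LazyBlock.
final class LazyColumnSketch
{
    private final Supplier<int[]> loader; // stands in for the expensive column read
    private int[] values;
    private int loadCount;

    LazyColumnSketch(Supplier<int[]> loader)
    {
        this.loader = loader;
    }

    // Loads the column on first access only; later calls reuse the loaded values.
    int get(int position)
    {
        if (values == null) {
            values = loader.get();
            loadCount++;
        }
        return values[position];
    }

    int getLoadCount()
    {
        return loadCount;
    }

    public static void main(String[] args)
    {
        LazyColumnSketch column = new LazyColumnSketch(() -> new int[] {10, 20, 30});
        System.out.println(column.getLoadCount()); // nothing has been read yet
        System.out.println(column.get(1) + column.get(2));
        System.out.println(column.getLoadCount());
    }
}
```

If a filter on another column rejects every row of a batch, a column wrapped this way is never decoded at all, which is where the saving comes from.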